Naive Bayes is a simple yet highly effective classification algorithm. It relies on probability theory and frequently acts as a robust baseline for tasks in text classification, including spam detection, sentiment analysis, and document categorization. If you are interested in mastering such concepts, enrolling in an Artificial Intelligence Course in Trivandrum at FITA Academy can provide you with a solid foundation. This blog will explain what Naive Bayes is, how it works, and why it continues to be a popular choice in the data science community.

What is Naive Bayes?

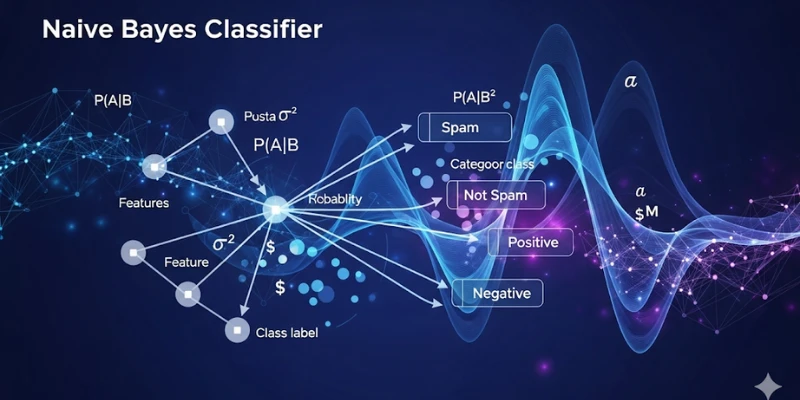

Naive Bayes is a family of probabilistic classifiers based on Bayes’ Theorem. Given an instance, it estimates the probability that the instance belongs to each class and predicts the most probable one. Despite its name, the algorithm is not “naive” in performance but in its assumption: it assumes that the features used for classification are conditionally independent of each other, given the class label.

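This assumption simplifies the model and makes it computationally efficient. In many real-world scenarios the independence assumption does not fully hold, yet the algorithm often still classifies accurately, because a correct prediction only requires the true class to receive the highest score, not precisely calibrated probabilities.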
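In symbols, writing $y$ for the class label and $x_1, \dots, x_n$ for the features (notation introduced here for illustration), the conditional independence assumption lets the joint likelihood of the features factor into a product of per-feature terms:

```latex
P(x_1, x_2, \dots, x_n \mid y) = \prod_{i=1}^{n} P(x_i \mid y)
```

Each factor $P(x_i \mid y)$ can be estimated on its own, which is where the efficiency comes from: instead of modelling every combination of feature values, the model only needs one small distribution per feature and class.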

Understanding Bayes’ Theorem

At the core of Naive Bayes is Bayes’ Theorem, which provides a mathematical framework for updating the probability of a hypothesis in light of new evidence. In classification tasks, the hypothesis is the class label and the evidence is the observed set of features.

Bayes’ Theorem states:

Probability of class given the data = (Probability of data given the class * Prior probability of the class) / Probability of the data
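The same statement in notation, with $y$ as the class and $\mathbf{x} = (x_1, \dots, x_n)$ as the features (symbols chosen here for illustration). Because $P(\mathbf{x})$ is the same for every class, a classifier can drop it and simply pick the class with the largest numerator; combining this with the independence assumption gives the usual Naive Bayes decision rule:

```latex
P(y \mid \mathbf{x}) = \frac{P(\mathbf{x} \mid y)\, P(y)}{P(\mathbf{x})},
\qquad
\hat{y} = \arg\max_{y} \; P(y) \prod_{i=1}^{n} P(x_i \mid y)
```

In practice, implementations usually add log-probabilities instead of multiplying probabilities, since a product of many small numbers can underflow to zero.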

This allows Naive Bayes to calculate how likely it is that a piece of data belongs to a particular class, using how frequently its features appear in that class during training. To gain deeper insights into such algorithms, consider signing up for an Artificial Intelligence Course in Kochi, where foundational concepts like these are covered thoroughly.
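To make the formula concrete, here is a small worked example with made-up counts (hypothetical, for illustration only): out of 100 training emails, 30 are spam, and the word "free" appears in 12 spam emails and 3 non-spam emails. Each term of Bayes’ Theorem is estimated from these frequencies; with a single feature, the independence assumption does not come into play yet. Python's `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

# Hypothetical training counts (illustrative only, not real data).
total_emails = 100
spam_emails = 30
free_in_spam = 12   # spam emails containing the word "free"
free_in_ham = 3     # non-spam emails containing "free"

# Prior probability of the class: P(spam)
p_spam = Fraction(spam_emails, total_emails)

# Probability of the data given the class: P("free" | spam)
p_free_given_spam = Fraction(free_in_spam, spam_emails)

# Probability of the data: P("free") across all emails
p_free = Fraction(free_in_spam + free_in_ham, total_emails)

# Bayes' Theorem: P(spam | "free")
p_spam_given_free = p_free_given_spam * p_spam / p_free

print(p_spam_given_free, float(p_spam_given_free))
```

With these counts the posterior is 4/5: seeing "free" raises the estimated probability of spam from the 30% prior to 80%.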

Types of Naive Bayes Classifiers

The Naive Bayes algorithm has multiple variants, each of which is appropriate for a particular kind of data:

- Gaussian Naive Bayes: Used for continuous features, assuming that within each class every feature follows a normal (Gaussian) distribution.

- Multinomial Naive Bayes: Suited to discrete count data, especially word counts in text classification.

- Bernoulli Naive Bayes: Used for binary or boolean features, such as presence or absence of a word.

Choosing the right type depends on the nature of your data and the problem you aim to solve.
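As an illustration of the Gaussian variant, the sketch below implements it from scratch in plain Python: each class stores a prior plus a mean and variance for every feature, and prediction sums log-probabilities to avoid underflow. The function names, the toy data, and the `1e-9` variance floor are assumptions made for this example; libraries such as scikit-learn provide maintained implementations of all three variants (`GaussianNB`, `MultinomialNB`, `BernoulliNB`).

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate each class's prior plus a mean and variance for every feature.

    X is a list of feature vectors (lists of floats); y is the list of labels.
    """
    groups = defaultdict(list)
    for features, label in zip(X, y):
        groups[label].append(features)

    model = {}
    for label, rows in groups.items():
        n = len(rows)
        columns = list(zip(*rows))  # transpose: one tuple per feature
        means = [sum(col) / n for col in columns]
        # Maximum-likelihood variance plus a tiny floor so it is never zero
        # (the 1e-9 floor is an assumption of this sketch).
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9
            for col, m in zip(columns, means)
        ]
        model[label] = (n / len(X), means, variances)
    return model


def gaussian_log_pdf(x, mean, var):
    """Log of the normal density N(x; mean, var)."""
    return -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)


def predict_gaussian_nb(model, features):
    """Return the class maximizing log P(class) + sum of log P(feature | class)."""
    best_label, best_score = None, -math.inf
    for label, (prior, means, variances) in model.items():
        score = math.log(prior) + sum(
            gaussian_log_pdf(x, m, v)
            for x, m, v in zip(features, means, variances)
        )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy data (hypothetical): two well-separated clusters with two features each.
X = [[1.0, 2.1], [1.2, 1.9], [0.9, 2.0], [3.0, 4.1], [3.2, 3.9], [2.9, 4.0]]
y = ["a", "a", "a", "b", "b", "b"]
model = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(model, [1.1, 2.0]))
print(predict_gaussian_nb(model, [3.1, 4.0]))
```

The Multinomial and Bernoulli variants differ only in how each per-feature term $P(x_i \mid y)$ is modelled (count-based word probabilities, or a per-feature presence probability), while the log-sum-and-argmax structure stays the same.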

Applications of Naive Bayes

Naive Bayes is widely used in practical applications due to its simplicity and efficiency. Some of the most common use cases include:

- Email Spam Detection: By analyzing the frequency of words commonly found in spam emails, Naive Bayes can classify incoming messages as spam or not.

- Sentiment Analysis: It assists in determining if a review or social media comment is positive, negative, or neutral according to its content.

- Document Categorization: The algorithm is used to assign documents to topics or genres based on word patterns.

These applications benefit from Naive Bayes’ ability to handle high-dimensional data, especially in text-heavy domains. If you want to learn more about applying such techniques, an Artificial Intelligence Course in Pune can help you build practical skills in this area.
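The spam-detection use case can be sketched with Multinomial Naive Bayes in a few lines of plain Python. This is a minimal illustration that assumes whitespace tokenization and a tiny hand-made training set (both hypothetical; real filters use far more data and better preprocessing). The helper names are illustrative, `alpha` applies add-one (Laplace) smoothing, and words never seen during training are simply skipped.

```python
import math
from collections import Counter


def train_multinomial_nb(docs, labels, alpha=1.0):
    """Count words per class; alpha is the additive (Laplace) smoothing constant."""
    vocab = {word for doc in docs for word in doc.lower().split()}
    class_counts = Counter(labels)
    word_counts = {c: Counter() for c in class_counts}
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.lower().split())

    log_priors = {c: math.log(n / len(docs)) for c, n in class_counts.items()}
    log_likelihoods = {}
    for c, counts in word_counts.items():
        total = sum(counts.values()) + alpha * len(vocab)
        log_likelihoods[c] = {
            w: math.log((counts[w] + alpha) / total) for w in vocab
        }
    return log_priors, log_likelihoods


def classify(doc, log_priors, log_likelihoods):
    """Pick the class with the highest log prior + summed word log-likelihoods.

    Words that never appeared in training are ignored.
    """
    scores = {}
    for c, prior in log_priors.items():
        scores[c] = prior + sum(
            log_likelihoods[c][w]
            for w in doc.lower().split()
            if w in log_likelihoods[c]
        )
    return max(scores, key=scores.get)


# Hypothetical training data.
docs = [
    "win a free prize now",
    "free money click now",
    "meeting agenda for monday",
    "lunch with the project team",
]
labels = ["spam", "spam", "ham", "ham"]
priors, likelihoods = train_multinomial_nb(docs, labels)
print(classify("free prize inside", priors, likelihoods))
print(classify("team meeting on monday", priors, likelihoods))
```

The first message is labelled spam because "free" and "prize" were counted in the spam examples; the second is labelled ham. Because every word in the vocabulary gets its own probability per class, the model handles thousands of word features without any extra machinery.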

Advantages of Naive Bayes

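One of the biggest advantages of Naive Bayes is its speed. Training amounts to a single pass over the data to count frequencies (or compute means and variances), so it scales linearly with the number of examples and features, and prediction is similarly fast, even with large datasets. It also performs well with relatively small amounts of training data. Moreover, it is fairly robust to irrelevant features: a feature that is distributed similarly in every class contributes roughly the same factor to each class's score and so has little effect on the prediction, which helps in noisy datasets.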

Another key strength is that Naive Bayes requires less computational power than more complex algorithms, making it well suited to real-time applications.

Limitations to Consider

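While Naive Bayes performs well in many scenarios, its main limitation is its strong assumption that features are conditionally independent. In reality, features are often correlated, so the same evidence is effectively counted more than once; this can make the predicted probabilities overconfident and, in some cases, lead to less accurate predictions.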
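To see why correlated features cause trouble, consider the extreme case where one feature is simply duplicated. The sketch below uses hypothetical numbers (two classes with equal priors, and a feature seen in 80% of class A and 20% of class B); the function name and values are illustrative assumptions. Counting the same evidence twice pushes the posterior for class A from 4/5 to 16/17, even though no new information was added.

```python
from fractions import Fraction


def posterior_a(prior_a, lik_a, lik_b, copies):
    """Posterior P(A | evidence) for two classes, A and B, when the same
    feature is multiplied in `copies` times under the independence assumption."""
    prior_b = 1 - prior_a
    score_a = prior_a * lik_a ** copies
    score_b = prior_b * lik_b ** copies
    return score_a / (score_a + score_b)


# Hypothetical numbers: equal priors, feature likelihoods 4/5 (A) and 1/5 (B).
prior_a = Fraction(1, 2)
lik_a, lik_b = Fraction(4, 5), Fraction(1, 5)

once = posterior_a(prior_a, lik_a, lik_b, copies=1)
twice = posterior_a(prior_a, lik_a, lik_b, copies=2)  # perfectly correlated duplicate
print(once, float(once))
print(twice, float(twice))
```

The predicted class does not change here, which is why accuracy can survive the violated assumption, but the confidence attached to it is inflated.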

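Also, if a feature value never appears with a given class in the training data, its estimated probability for that class is zero. Because the per-feature probabilities are multiplied, any new instance containing that feature then receives zero probability for the class, regardless of all other evidence. Smoothing techniques, such as Laplace (add-one) smoothing, alleviate this problem by adding a small count to every feature.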
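A small sketch of additive smoothing with made-up counts: a word that never occurred in a class gets probability zero under raw frequencies, which wipes out the whole product, while adding `alpha` to every count (Laplace smoothing when `alpha` is 1) keeps it small but positive. The helper name and all numbers are hypothetical, and `alpha` is restricted to non-negative integers here so that `Fraction` can keep the arithmetic exact.

```python
from fractions import Fraction


def word_prob(word_count, total_words, vocab_size, alpha):
    """Additive-smoothing estimate of P(word | class).

    alpha=0 gives the raw frequency; alpha=1 gives Laplace (add-one) smoothing.
    alpha must be a non-negative integer in this sketch.
    """
    return Fraction(word_count + alpha, total_words + alpha * vocab_size)


# Hypothetical class statistics: the word never occurred in this class.
count, total, vocab = 0, 50, 10

unsmoothed = word_prob(count, total, vocab, alpha=0)
smoothed = word_prob(count, total, vocab, alpha=1)

# Hypothetical product of the other word probabilities for this class.
other_evidence = Fraction(1, 100)

print(unsmoothed * other_evidence)  # 0: one unseen word cancels all other evidence
print(smoothed * other_evidence)
```

With smoothing the unseen word contributes 1/60 instead of 0, so the class can still win if the other features support it strongly.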

Naive Bayes remains a popular classification technique because of its simplicity, interpretability, and efficiency. Although it relies on a simplifying assumption, it often delivers strong performance on real-world problems, especially in text classification.

If you’re starting out in machine learning or working with large text datasets, Naive Bayes is an excellent algorithm to explore. If you want to establish a solid foundation in AI principles, an Artificial Intelligence Course in Chandigarh can offer thorough instruction and practical experience with Naive Bayes and other algorithms.
